Introduction: Why Accountability Matters in AI

Artificial Intelligence is shaping industries, powering everyday apps, and even making decisions that affect people’s lives. Yet every time an AI system fails, whether through a biased hiring algorithm, a faulty medical recommendation, or a chatbot spreading misinformation, the same question comes up: “Who is responsible?”

That’s the elephant in the room. AI is powerful, but trust in it is shaky. Users, regulators, and even employees hesitate to embrace it fully because accountability is often vague. The truth is simple: genuine accountability only begins when someone is made truly responsible for the outcomes.

This article explores how assigning named ownership at every stage of an AI project, whether to a product owner, ethics officer, or compliance lead, can restore trust and support ethical decision-making.

The Trust Deficit: Quick Evidence & Stakes

Why is AI facing a trust crisis? A few reasons stand out:

- Reputation Risks: High-profile failures lead to public backlash (e.g., biased image recognition).

- Legal Risks: Regulators in several jurisdictions are drafting or enacting AI rules that expect clearly identified accountable parties.

- User Safety Risks: A single unchecked model can harm millions instantly.

- Market Risks: Investors are wary of funding products vulnerable to ethical scandals.

Without someone clearly responsible, AI risks looking like a “black box of excuses.”

What “Named Ownership” Actually Means

Named ownership means attaching a real person’s name to each critical decision, stage, or responsibility in an AI system’s lifecycle.

Think of it like signing your name on a contract: you suddenly take it more seriously. When people know their name is on the line, they’re less likely to cut corners. It’s not about punishment but about ensuring attention, care, and accountability.

Contrast that with the current “diffused responsibility” approach, where teams point fingers when things go wrong. With no one to hold accountable, trust collapses.

Key Named Roles Across the AI Lifecycle

Assigning named owners at every stage of the AI lifecycle ensures there is always a “face of responsibility.” This not only prevents finger-pointing but also builds trust by making accountability visible and traceable.

The Product Owner or Project Manager takes charge of defining the AI system’s purpose, scope, and deployment strategy. They own the product roadmap and its impact on users, balancing business goals with ethical obligations.

The Ethics Officer or AI Ethics Lead serves as the conscience of the project. Their role is to review models for bias, fairness, and unintended consequences. Importantly, they hold the authority to stop deployment if ethical red flags arise, ensuring that responsibility for ethical decisions is not sidelined.

The Compliance Lead or Legal Officer ensures that the system adheres to relevant laws, regulations, and industry standards. They are responsible for audit-ready documentation and act as translators between engineers, executives, and policymakers.

Meanwhile, operational reliability depends on specialized roles. The Data Steward is responsible for keeping datasets accurate, checked for bias, and responsibly sourced. The MLOps Lead manages version control, model updates, and system monitoring. Finally, QA Specialists stress-test models against edge cases to confirm stability and reliability.

Together, these roles form a safety net of accountability. Each name attached to a responsibility ensures that critical decisions are not left to chance, creating transparency, ethical rigor, and resilience throughout the AI lifecycle. This structure transforms accountability from theory into daily practice.

Why Assigning Responsibility Changes Outcomes

Named ownership isn’t just a bureaucratic checkbox. It fundamentally shifts behavior and results.

Psychological & Incentive Effects

When a role is named:

- People take decisions more seriously (no hiding behind “the team”).

- Risky shortcuts become less likely, because reputations are at stake.

- Transparency builds confidence for both insiders and outsiders.

Think of it like flying a plane: you wouldn’t want “a group of people” piloting. You want a captain.

Practical Mechanisms to Attach Names to Decisions

How do you make named ownership real instead of symbolic? Through structured processes.

RACI & Responsibility Matrices

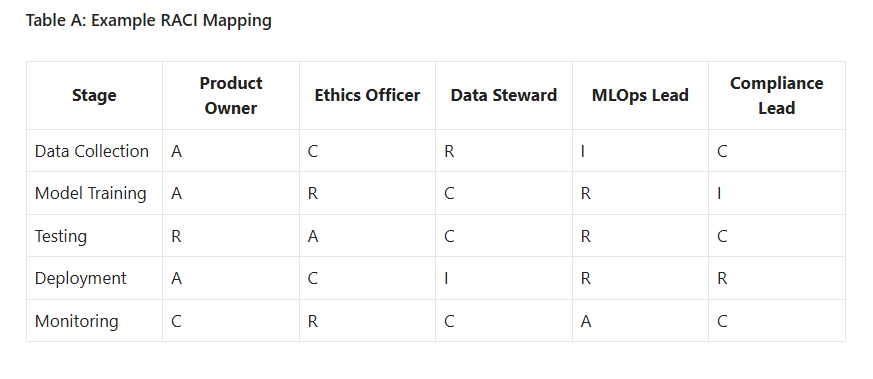

A RACI Matrix (Responsible, Accountable, Consulted, Informed) clarifies who does what.

A = Accountable, R = Responsible, C = Consulted, I = Informed
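A RACI matrix can also live in code, where it can be checked automatically. The sketch below is a minimal illustration, not a standard tool: the stage names, the people, and the helpers `validate_raci` and `accountable_for` are all hypothetical. It enforces the usual RACI convention that each stage has exactly one Accountable person and at least one Responsible person.

```python
# Hypothetical RACI matrix: stage -> {person: codes}. Names and stages are
# illustrative only. A person may hold more than one code for a stage
# (for example "AR" means both Accountable and Responsible).
RACI = {
    "data sourcing": {"D. Steward": "AR", "E. Officer": "C", "P. Owner": "I"},
    "model training": {"M. Lead": "AR", "D. Steward": "C", "P. Owner": "I"},
    "ethics review": {"E. Officer": "A", "Q. Specialist": "R", "C. Lead": "C"},
    "deployment": {"P. Owner": "A", "M. Lead": "R", "E. Officer": "C", "C. Lead": "I"},
}

VALID_CODES = set("RACI")


def validate_raci(matrix):
    """Return a list of problems; an empty list means the matrix is well-formed."""
    problems = []
    for stage, assignments in matrix.items():
        accountable = [p for p, codes in assignments.items() if "A" in codes]
        responsible = [p for p, codes in assignments.items() if "R" in codes]
        if len(accountable) != 1:
            problems.append(
                f"{stage}: expected exactly one Accountable, found {len(accountable)}"
            )
        if not responsible:
            problems.append(f"{stage}: nobody is Responsible")
        for person, codes in assignments.items():
            # Reject empty strings and any letter outside R, A, C, I.
            if not codes or set(codes) - VALID_CODES:
                problems.append(f"{stage}: invalid code {codes!r} for {person}")
    return problems


def accountable_for(matrix, stage):
    """Return the single named person accountable for a stage."""
    return next(p for p, codes in matrix[stage].items() if "A" in codes)


if __name__ == "__main__":
    print(validate_raci(RACI))                  # prints []
    print(accountable_for(RACI, "deployment"))  # prints P. Owner
```

Running a check like this in a project's continuous integration is one way to keep the matrix from drifting out of date as teams change.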

Technical Tooling

- Immutable audit logs to track who approved what.

- Signed pull requests (PRs) in code repositories.

- Versioned datasets with steward signatures.
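As a rough sketch of the first item, an approval log can be made tamper-evident by chaining entries together with hashes, so that editing any past entry invalidates everything after it. This is an assumption-laden illustration: the `AuditLog` class, its field names, and the sample approvers are hypothetical, and a production system would also need durable storage and real cryptographic signatures rather than hashes alone.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only approval log; each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Serialize with sorted keys so the same content always hashes the same way.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, approver, role, decision, artifact):
        """Append who decided what, linked to the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "approver": approver,
            "role": role,
            "decision": decision,
            "artifact": artifact,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("E. Officer", "Ethics Officer", "approved", "model-v1")
    log.record("C. Lead", "Compliance Lead", "approved", "model-v1")
    print(log.verify())  # prints True

    log.entries[0]["decision"] = "rejected"  # simulate tampering
    print(log.verify())  # prints False
```

The same idea extends to versioned datasets: hashing a dataset file and recording the hash alongside the steward's name ties a specific version to a specific person.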

Organizational Design

- Train staff on their accountability role.

- Create escalation paths (so ethics officers aren’t ignored).

- Align incentives: performance reviews should reflect ethical handling, not just speed.

Case Studies & Hypothetical Scenarios

Let’s see how named ownership changes outcomes.

Scenario A – Recommendation Algorithm Causes Harm

- Without named ownership: Confusion, finger-pointing, slow response. Users suffer, trust collapses.

- With named ownership: The Product Owner communicates openly, the Ethics Officer investigates bias, and the MLOps Lead patches the system. Faster fix, clearer accountability, reduced fallout.

Scenario B – Post-Deployment Bias Found

- Anonymous system: Company faces lawsuits, regulators step in.

- Named ownership system: The Ethics Officer pauses the deployed model, the Data Steward identifies the biased samples, and the fix is documented and shared transparently.

Governance & Legal Intersection

Assigning names aligns with regulatory and governance trends.

Regulatory Context

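- GDPR: Requires certain organizations, including public authorities and those whose core activities involve large-scale monitoring or processing of sensitive data, to designate a Data Protection Officer.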

- EU AI Act: Places obligations on identifiable providers and deployers of high-risk systems, including assigning human oversight to competent natural persons.

- ISO AI Standards (e.g., ISO/IEC 42001 on AI management systems): Encourage defined roles, traceability, and accountability.

Contracts, Liability, and Insurance

Named roles simplify contracts: if a model fails, the liability trail is easier to follow. Insurers may also look more favorably on companies with clear accountability structures.

Common Objections & Rebuttals

- Objection: “Won’t this create scapegoats?”

Rebuttal: No, accountability is shared but tracked. Named ownership adds clarity, not blame games.

- Objection: “It’ll slow innovation.”

Rebuttal: Actually, it prevents costly failures. Fast but reckless innovation often kills trust.

- Objection: “Too complex for startups.”

Rebuttal: Even small teams can assign simple named roles (e.g., one person wearing two hats).

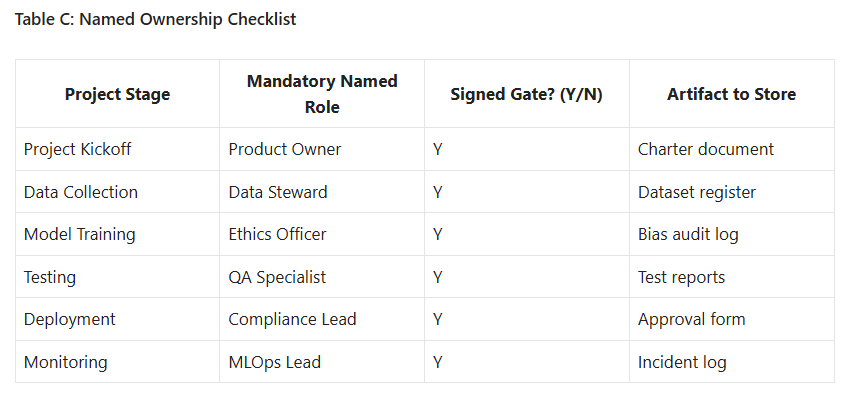

Practical Playbook: Step-by-Step Guide to Implement Named Ownership

Here’s a simple roadmap to adopt named ownership.

Follow this and accountability becomes systematic, not optional.

Measuring Success: KPIs That Show Trust Is Improving

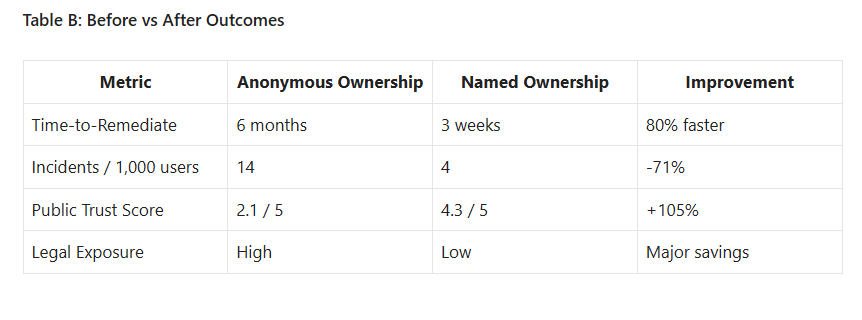

Accountability in AI isn’t just about assigning names; it’s about proving that trust is improving over time. The best way to do this is through Key Performance Indicators (KPIs) that capture both quantitative and qualitative outcomes.

On the quantitative side, organizations should track remediation times when issues arise. A shorter gap between problem detection and resolution shows that named owners are taking swift action. Similarly, a reduction in the number of incidents per deployment cycle signals that oversight is working. Higher compliance pass rates during internal or external audits also provide concrete evidence that accountability measures are translating into reliable systems.
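The three quantitative indicators above are simple to compute once incidents and audits are recorded consistently. The following is a minimal sketch with made-up data; the `Incident` record and the function names are hypothetical, and real figures would come from an incident tracker or audit system.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Incident:
    detected: datetime      # when the problem was first noticed
    resolved: datetime      # when the fix was confirmed
    deployment_cycle: str   # hypothetical label such as "2024-Q1"


def mean_remediation_hours(incidents):
    """Average gap between detection and resolution, in hours.

    Raises statistics.StatisticsError if there are no incidents.
    """
    return mean((i.resolved - i.detected).total_seconds() / 3600 for i in incidents)


def incidents_per_cycle(incidents, cycles):
    """Count incidents per deployment cycle, including cycles with none."""
    counts = {cycle: 0 for cycle in cycles}
    for incident in incidents:
        counts[incident.deployment_cycle] = counts.get(incident.deployment_cycle, 0) + 1
    return counts


def audit_pass_rate(results):
    """Fraction of audits passed, given a non-empty list of True/False outcomes."""
    return sum(results) / len(results)


if __name__ == "__main__":
    # Illustrative data only.
    incidents = [
        Incident(datetime(2024, 1, 10, 9), datetime(2024, 1, 10, 15), "2024-Q1"),
        Incident(datetime(2024, 2, 3, 12), datetime(2024, 2, 3, 14), "2024-Q1"),
    ]
    print(mean_remediation_hours(incidents))                      # prints 4.0
    print(incidents_per_cycle(incidents, ["2024-Q1", "2024-Q2"]))  # prints {'2024-Q1': 2, '2024-Q2': 0}
    print(audit_pass_rate([True, True, False, True]))             # prints 0.75
```

Tracking these values per cycle, rather than as one-off numbers, is what shows whether the trend is actually improving.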

On the qualitative side, softer trust indicators matter just as much. Regular user trust surveys can reveal whether customers feel safer using AI-powered tools. Positive shifts in media coverage and analyst reviews can indicate a stronger public reputation. Internally, improvements in employee morale and retention rates often reflect confidence in the fairness and clarity of accountability structures.

When both kinds of indicators improve together, with fewer incidents and happier users, it’s a clear sign that accountability frameworks are not only working but also delivering business value.

Scaling Named Ownership Without Killing Innovation

Scaling accountability depends on organizational size. Startups can take a lightweight approach, with one person covering multiple roles while still documenting ownership. Enterprises require a structured setup with dedicated officers, approval workflows, and compliance checkpoints. A hybrid approach works best for many: use digital tools to automate audit trails, simplify sign-offs, and minimize bureaucracy while keeping innovation fast and agile.
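One way to keep sign-offs lightweight is an automated deployment gate that checks for a recorded decision from each required role. The sketch below is illustrative only: `SignOff`, `REQUIRED_ROLES`, and `deployment_decision` are hypothetical names, and the role list is an assumption drawn from the roles described earlier. It works the same whether three people hold the roles or, as in a startup, one person holds two of them.

```python
from dataclasses import dataclass

# Assumed set of roles whose sign-off is required before release.
REQUIRED_ROLES = ("Product Owner", "Ethics Officer", "Compliance Lead")


@dataclass(frozen=True)
class SignOff:
    role: str
    name: str
    approved: bool
    note: str = ""


def deployment_decision(sign_offs, required_roles=REQUIRED_ROLES):
    """Return (may_deploy, reasons). Any missing or negative sign-off blocks release."""
    by_role = {s.role: s for s in sign_offs}
    reasons = []
    for role in required_roles:
        sign_off = by_role.get(role)
        if sign_off is None:
            reasons.append(f"missing sign-off: {role}")
        elif not sign_off.approved:
            note = sign_off.note or "no reason given"
            reasons.append(f"blocked by {role} ({sign_off.name}): {note}")
    return (not reasons, reasons)


if __name__ == "__main__":
    # Startup case: one person wears two hats, but both roles are still recorded.
    sign_offs = [
        SignOff("Product Owner", "A. Founder", True),
        SignOff("Ethics Officer", "A. Founder", False, "bias check incomplete"),
        SignOff("Compliance Lead", "B. Counsel", True),
    ]
    may_deploy, reasons = deployment_decision(sign_offs)
    print(may_deploy)  # prints False
    print(reasons)     # prints ['blocked by Ethics Officer (A. Founder): bias check incomplete']
```

Because the gate returns named reasons rather than a bare yes or no, a blocked release always points to the person who can unblock it.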

The message is clear: accountability and innovation are not enemies; they’re partners in building lasting trust.

Think of it like adding seatbelts to cars: you don’t stop driving, you just drive more safely.

Conclusion: From Names to Culture – Making Accountability Sticky

AI won’t gain widespread trust unless it becomes clear who is responsible. Named ownership transforms accountability from theory into practice. It forces ethical decision-making, improves transparency, and reduces harm.

Assigning a name at each stage, from Product Owner to Ethics Officer, doesn’t just check a regulatory box. It builds a culture of care. And when culture changes, trust follows.

FAQs

Q1: How is named ownership different from shared responsibility?

Named ownership means one person is clearly accountable, while shared responsibility often leads to blurred lines and no clear action when problems arise.

Q2: Can startups implement named ownership with limited staff?

Yes. A single person can wear multiple hats (e.g., Product Owner + Ethics Lead) as long as responsibilities are explicitly documented.

Q3: What’s the first step for companies adopting this model?

Start with a simple RACI matrix for your AI projects. Assign one named person for each critical stage, then scale as the team grows.