If you’ve ever typed a question into ChatGPT or crafted a command for Midjourney, congratulations: you’ve already practiced prompt design. But there’s a whole other layer to this process, known as prompt engineering, and it’s what transforms those simple instructions into powerful, repeatable, scalable results across industries. The distinction may sound subtle at first, but the differences run deep, especially if you’re building or optimizing AI systems for real-world use.

With generative AI tools taking center stage in everything from SEO to customer service, understanding how to communicate with these models is no longer optional; it’s essential. Whether you’re a content creator trying to get better outputs or a developer embedding AI into enterprise workflows, the line between prompt design and prompt engineering could define the success of your work.

In this article, we’ll break down these two essential concepts and how they differ in terms of scope, goal, technique, and technical depth. We’ll explore how Google defines both terms, highlight some real-world use cases, and provide actionable takeaways for creators, developers, and marketers alike.

Introduction To Prompt Design

Definition and Primary Goal

Prompt design is the art of crafting input text in a way that elicits the best possible response from a generative AI model. It focuses on how you ask the question, not just what you’re asking.

According to Google Cloud’s Generative AI documentation: “Prompt design is the process of creating prompts that elicit the desired response from a language model.”

The primary goal of prompt design is clarity and specificity. Whether you’re asking the AI to write code, summarize a blog post, or simulate a character in a video game, you need to guide it with a well-structured and intention-rich prompt.

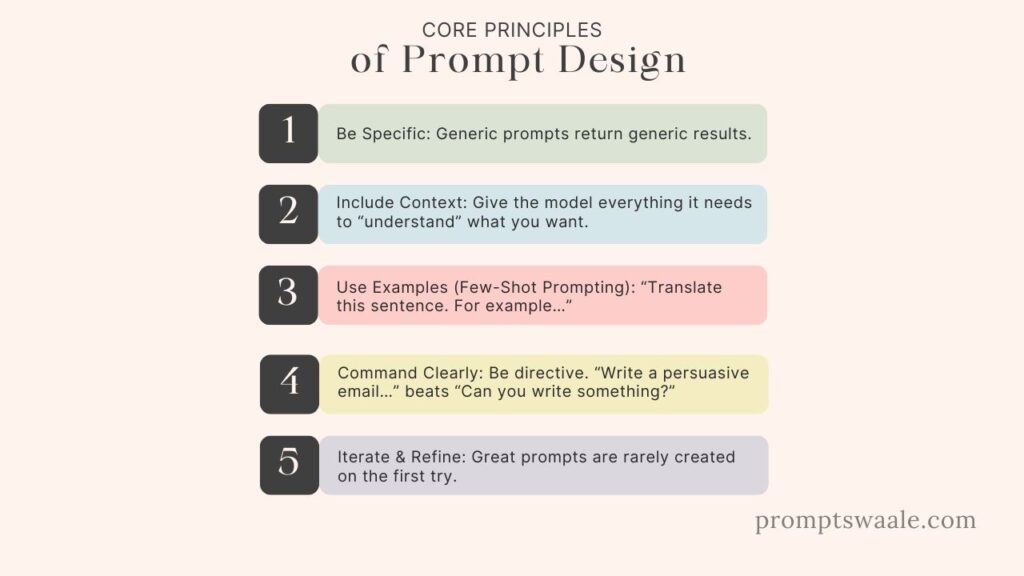

Core Principles of Prompt Design

- Be Specific: Generic prompts return generic results.

- Include Context: Give the model everything it needs to “understand” what you want.

- Use Examples (Few-Shot Prompting): “Translate this sentence. For example…”

- Command Clearly: Be directive. “Write a persuasive email…” beats “Can you write something?”

- Iterate & Refine: Great prompts are rarely created on the first try.
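As a concrete illustration of few-shot prompting, the Python sketch below assembles an instruction, a couple of worked examples, and a new input into a single prompt string. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are illustrative assumptions, not a format any particular model requires.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Combine an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        # Each example shows the model the exact input/output pattern we expect.
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # Finish with the new input and an empty "Output:" slot for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate each English sentence into French.",
    [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")],
    "See you tomorrow.",
)
print(prompt)
```

Two or three well-chosen examples are often enough to lock in a format; more examples make the prompt longer and therefore more expensive.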

Prompt design is particularly effective for use cases like content creation, email marketing, product descriptions, and idea generation.

Tools and Strategies for Effective Prompt Design

Some of the most popular tools for prompt designers include:

- ChatGPT’s custom instructions

- Prompt libraries and extensions (AIPRM, PromptHero)

- Midjourney’s image prompt tools

- Tone adjustment options in Google Bard (now Gemini)

Even a simple template such as “You are a [role]. Act as if [scenario]. Your task is to [goal]…” can noticeably improve output quality.
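Filling that template programmatically is a small first step toward reuse. This is a minimal sketch; `fill_role_template` and the example values are hypothetical.

```python
# The role/scenario/goal template from the text, with named placeholders.
ROLE_TEMPLATE = "You are a {role}. Act as if {scenario}. Your task is to {goal}."


def fill_role_template(role: str, scenario: str, goal: str) -> str:
    """Return the template with each placeholder replaced by the given value."""
    return ROLE_TEMPLATE.format(role=role, scenario=scenario, goal=goal)


prompt = fill_role_template(
    role="travel copywriter",
    scenario="you are writing for first-time backpackers",
    goal="write a 100-word introduction to eco-friendly travel",
)
print(prompt)
```

Keeping the template in one place means a wording change applies everywhere it is used.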

But as good as prompt design can be, it still only addresses the interface layer of AI. To go deeper (to scale, systematize, and optimize), you need prompt engineering.

Introduction To Prompt Engineering

Definition and Broader Implications

Prompt engineering is a more technical and comprehensive discipline. It refers to the design, testing, optimization, and deployment of prompts within structured AI workflows and applications. This includes not just writing good prompts but managing how those prompts work under the hood across large-scale systems.

In a line from Google Cloud’s documentation that Opace and Medium also quote: “The iterative process of refining prompts and evaluating the model’s responses is often called prompt engineering.”

Unlike prompt design, which focuses on one prompt at a time, prompt engineering involves:

- Creating modular prompts

- Automating testing frameworks

- Integrating retrieval-augmented generation (RAG)

- Fine-tuning outputs using APIs

- Controlling costs and response lengths

Prompt Engineering in Real-World Systems

Take a customer support chatbot. A simple prompt design might say: “Answer this user question politely and provide a solution.”

A prompt engineering approach would:

- Identify intent,

- Pull relevant data from a knowledge base,

- Adjust tone based on user history,

- Add constraints to ensure safety,

- And log the entire interaction for retraining purposes.
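The five steps above can be sketched in a few dozen lines of Python. Everything here is a hypothetical stand-in: keyword matching replaces a real intent classifier, a dictionary replaces the knowledge base, and the returned prompt would still need to be sent to a model.

```python
from datetime import datetime, timezone

# Hypothetical knowledge base; a real system would query a search index or help center.
KNOWLEDGE_BASE = {
    "refund": "Refunds go back to the original payment method.",
    "password": "Passwords can be reset from the account settings page.",
}

interaction_log: list[dict] = []


def identify_intent(message: str) -> str:
    """Step 1: naive keyword matching stands in for a real intent classifier."""
    text = message.lower()
    for intent in KNOWLEDGE_BASE:
        if intent in text:
            return intent
    return "general"


def choose_tone(past_complaints: int) -> str:
    """Step 3: adjust tone using a (hypothetical) count of earlier complaints."""
    return "especially patient and apologetic" if past_complaints >= 2 else "friendly and concise"


def build_support_prompt(message: str, past_complaints: int) -> str:
    intent = identify_intent(message)
    # Step 2: pull relevant data, with an explicit note when nothing matches.
    context = KNOWLEDGE_BASE.get(intent, "No matching help article was found.")
    tone = choose_tone(past_complaints)
    prompt = (
        f"You are a customer support assistant. Be {tone}.\n"
        # Step 4: safety constraints written as explicit instructions.
        "Use only the reference information below. If it does not answer the question, "
        "say so and offer a human agent. Never ask for passwords or card numbers.\n"
        f"Reference information: {context}\n"
        f"Customer message: {message}"
    )
    # Step 5: log the interaction so it can be reviewed or used for retraining.
    interaction_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "intent": intent,
        "tone": tone,
        "prompt": prompt,
    })
    return prompt


print(build_support_prompt("How do I get a refund?", past_complaints=3))
```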

Techniques and Technical Depth

Advanced prompt engineering involves:

- Chain-of-thought prompting (instructing the model to reason step by step)

- Self-reflection prompts (asking the model to critique its own answer)

- Prompt tuning and embedding-based optimization

- Latent memory and agent-based control systems

It’s a field that merges AI research, software engineering, and user experience design. And as AI systems become more complex, demand for skilled prompt engineers keeps growing.
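Chain-of-thought and self-reflection prompting can be combined in a simple two-pass loop: draft with step-by-step reasoning, then ask the model to critique that draft. In this sketch, `ask_model` is any function that sends a prompt to an LLM and returns text; `fake_model` is a hypothetical placeholder so the code runs offline.

```python
from typing import Callable


def cot_prompt(question: str) -> str:
    """Chain-of-thought: ask for intermediate reasoning before the final answer."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, then give the final answer "
        "on a line starting with 'Answer:'."
    )


def reflection_prompt(question: str, draft: str) -> str:
    """Self-reflection: ask the model to critique and revise its own draft."""
    return (
        f"Question: {question}\n"
        f"Draft answer:\n{draft}\n"
        "Check the draft for factual or logical errors and list any you find. "
        "Then give a corrected final answer on a line starting with 'Answer:'."
    )


def answer_with_reflection(question: str, ask_model: Callable[[str], str]) -> str:
    """Two model calls: a reasoned draft, then a critique-and-revise pass."""
    draft = ask_model(cot_prompt(question))
    return ask_model(reflection_prompt(question, draft))


def fake_model(prompt: str) -> str:
    """Placeholder for a real LLM call so the sketch runs offline."""
    return f"[model reply to a {len(prompt)}-character prompt]"


print(answer_with_reflection("A train covers 120 km in 1.5 hours. What is its average speed?", fake_model))
```

Each pass is a full model call, so this pattern trades extra latency and tokens for a chance to catch mistakes in the first draft.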

Key Differences Between Prompt Design and Prompt Engineering

1. Scope and Scale

At its core, prompt design is task-specific, while prompt engineering is system-wide. Let’s break that down.

Prompt design helps you get a better response right now, for a single interaction or content creation task. You might adjust your prompt for tone, clarity, or audience fit. That’s it. The scope is typically a one-on-one interaction with the AI.

Prompt engineering, however, is built for scale. It’s not just about one user or one query. It’s about:

- Systematizing how prompts work across multiple users

- Deploying prompts within applications, APIs, or backend workflows

- Managing multiple variations, fallback prompts, and routing logic

In other words, where prompt design is a tool for creators, prompt engineering is a tool for builders. Think of prompt design as writing a killer sentence. Prompt engineering is designing an entire language processing pipeline.

2. Skill Sets Involved

Prompt design requires:

- Writing clarity

- Empathy

- Attention to detail

- Creativity

Prompt engineering demands:

- Understanding of AI model architecture

- Skills in APIs and programming languages (e.g., Python, Node.js)

- Ability to implement token control, truncation, and safety mechanisms

- Use of tools like LangChain, Pinecone, or other vector search databases

This contrast in skills is what separates prompt engineers from general AI users. The two roles often collaborate: one focuses on what to say, the other ensures it works under real-world constraints.

3. Output Optimization vs. System Integration

Prompt design is mostly concerned with output quality. Is the text good? Is it helpful? Does it sound right?

Prompt engineering is concerned with much more:

- Latency: How fast is the AI responding?

- Cost: Are we paying too much in tokens?

- Security: Are we leaking sensitive info?

- Accuracy: Is the model hallucinating facts?

These aren’t just questions about the model; they’re engineering problems. Prompt engineering embeds prompts into a production-ready framework with data pipelines, real-time testing, and fallback mechanisms.
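A fallback mechanism with latency tracking might look like the sketch below. It assumes the model client raises an exception on failure (for example, a timeout); `call_with_fallback`, `flaky_model`, and `canned_fallback` are hypothetical stand-ins, not part of any SDK.

```python
import time
from typing import Callable


def call_with_fallback(
    prompt: str,
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    retries: int = 2,
) -> dict:
    """Try the primary model up to `retries` times, then use the fallback.
    Latency covers all attempts so slow paths show up in monitoring."""
    start = time.perf_counter()
    text, source = "", "fallback"
    for attempt in range(retries):
        try:
            text = primary(prompt)
            source = "primary"
            break
        except Exception:  # broad catch keeps the sketch short; real code should be specific
            if attempt < retries - 1:
                time.sleep(0.05 * (attempt + 1))  # short, growing pause before retrying
    if source == "fallback":
        text = fallback(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    return {"text": text, "source": source, "latency_ms": latency_ms}


def flaky_model(prompt: str) -> str:
    raise TimeoutError("simulated timeout")


def canned_fallback(prompt: str) -> str:
    return "Sorry, I can't answer right now. A human agent will follow up."


result = call_with_fallback("Summarize my last invoice.", flaky_model, canned_fallback)
print(result["source"], f"{result['latency_ms']:.0f} ms")
```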

Google’s Perspective on Prompt Design vs Prompt Engineering

Quoting Google’s Official Definition

Google’s own documentation offers a useful contrast:

“Prompt design is the process of creating prompts that elicit the desired response from a language model. … The iterative process of refining prompts and evaluating the model’s responses is often called prompt engineering.” (cloud.google.com)

So from Google’s perspective:

- Prompt Design = Crafting initial prompt structure.

- Prompt Engineering = Continuous testing, tweaking, and deploying those prompts at scale.

This quote is important because it shows that even tech giants treat them as distinct but complementary practices.

Industry Usage According to Google and OpenAI

Both Google and OpenAI emphasize the need for structured prompting as AI systems evolve. For instance:

- OpenAI recommends few-shot and chain-of-thought prompting for more accurate outputs.

- Google’s Vertex AI team encourages designers to experiment with prompt variants, token limits, and dynamic prompts.

Their shared perspective? Prompting is a development discipline, not just a content trick. It’s about building robust AI behaviors through clever, structured input.

What Google Developers Recommend

Here’s what Google suggests developers do:

- Use prompt templates with placeholders (e.g., {{input_text}})

- Apply weighting techniques for multi-input prompts

- Add guardrails for safety (like refusing to answer harmful queries)

- Always evaluate prompts against output benchmarks

They also promote evaluation pipelines to test how a prompt performs under multiple contexts, something beyond the scope of simple prompt design.
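An evaluation pipeline of this kind can be sketched in Python: render a `{{input_text}}`-style template for several contexts and score each output against simple benchmark checks. The double-brace syntax follows the example above; `render`, `BENCHMARK`, and `echo_model` are illustrative assumptions, not part of any Google API.

```python
import re
from typing import Callable

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: dict[str, str]) -> str:
    """Fill {{name}} placeholders; raise if any value is missing."""
    missing = set(PLACEHOLDER.findall(template)) - set(values)
    if missing:
        raise KeyError(f"missing template values: {sorted(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


# Hypothetical benchmark: each context comes with checks the output must pass.
BENCHMARK = [
    {"input_text": "Our app syncs notes across devices.", "must_include": ["sync"], "max_words": 30},
    {"input_text": "The plan costs $5 per month.", "must_include": ["$5"], "max_words": 30},
]


def evaluate_prompt(template: str, model: Callable[[str], str]) -> float:
    """Render the template for every benchmark context and return the pass rate."""
    passed = 0
    for case in BENCHMARK:
        output = model(render(template, {"input_text": case["input_text"]}))
        has_terms = all(t.lower() in output.lower() for t in case["must_include"])
        short_enough = len(output.split()) <= case["max_words"]
        if has_terms and short_enough:
            passed += 1
    return passed / len(BENCHMARK)


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call: returns the last line of the prompt."""
    return prompt.splitlines()[-1]


score = evaluate_prompt("Rewrite this as a one-line tagline:\n{{input_text}}", echo_model)
print(f"pass rate: {score:.0%}")
```

Running the same benchmark against every new prompt variant turns “this wording feels better” into a number you can compare.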

Real-World Use Case Examples

Prompt Design in Content Creation

Let’s take a blog writer or YouTuber. They need AI to generate:

- Video scripts,

- Social captions,

- Meta descriptions,

- Blog posts.

A good prompt might look like:

“Write a friendly introduction for a blog about eco-friendly travel tips, in less than 100 words.”

This is a clear case of prompt design: the goal is to generate something creative and useful. The design matters, but it’s limited to a single interaction.

Prompt Engineering in Customer Support Bots

Now imagine an AI bot used by a SaaS company. It needs to:

- Answer customer questions,

- Pull data from help docs,

- Adapt tone based on frustration level,

- Work across 5 languages.

This requires prompt engineering, where you:

- Route queries via a classifier,

- Dynamically build prompts with retrieved content,

- Limit responses to 100 tokens (to save cost),

- Include fallback prompts if no answer is found.

It’s a complete AI pipeline, not just a clever sentence.
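Here is a hedged sketch of that routing logic. A keyword check stands in for a trained classifier, `HELP_DOCS` is a hypothetical stand-in for retrieved help-doc content, and `max_tokens` is shown as a generic request field, since parameter names vary by provider.

```python
# Hypothetical help-doc snippets grouped by topic; a real bot would search its docs.
HELP_DOCS = {
    "billing": ["Invoices are available under Account > Billing."],
    "technical": ["Clear the browser cache if the dashboard does not load."],
}

FALLBACK_PROMPT = (
    "Apologize briefly, say you could not find an answer in the help docs, "
    "and offer to connect the customer with a human agent. Reply in {language}."
)


def classify(query: str) -> str:
    """Keyword routing stands in for a trained classifier."""
    q = query.lower()
    if any(w in q for w in ("invoice", "charge", "refund", "billing")):
        return "billing"
    if any(w in q for w in ("error", "crash", "load", "bug")):
        return "technical"
    return "unknown"


def build_request(query: str, language: str = "English") -> dict:
    topic = classify(query)
    docs = HELP_DOCS.get(topic, [])
    if docs:
        # Build the prompt dynamically from the retrieved snippets.
        prompt = (
            "Answer the customer using only these help-doc excerpts:\n"
            + "\n".join(f"- {d}" for d in docs)
            + f"\nCustomer question: {query}\nReply in {language}."
        )
    else:
        # No relevant content: switch to the fallback prompt instead of guessing.
        prompt = FALLBACK_PROMPT.format(language=language) + f"\nCustomer question: {query}"
    # The 100-token cap mirrors the example above.
    return {"topic": topic, "prompt": prompt, "max_tokens": 100}


print(build_request("Where can I find my invoice?", language="German")["prompt"])
```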

Comparison in Productivity Tools

Take tools like Notion AI or GrammarlyGO. They use:

- Prompt design for writing-style controls.

- Prompt engineering to make features like “Rewrite this more politely” consistent across users and content types.

In both cases, the end user never sees the prompt, but engineering teams are tweaking, optimizing, and tracking results behind the scenes.

The Role of Iteration in Both Disciplines

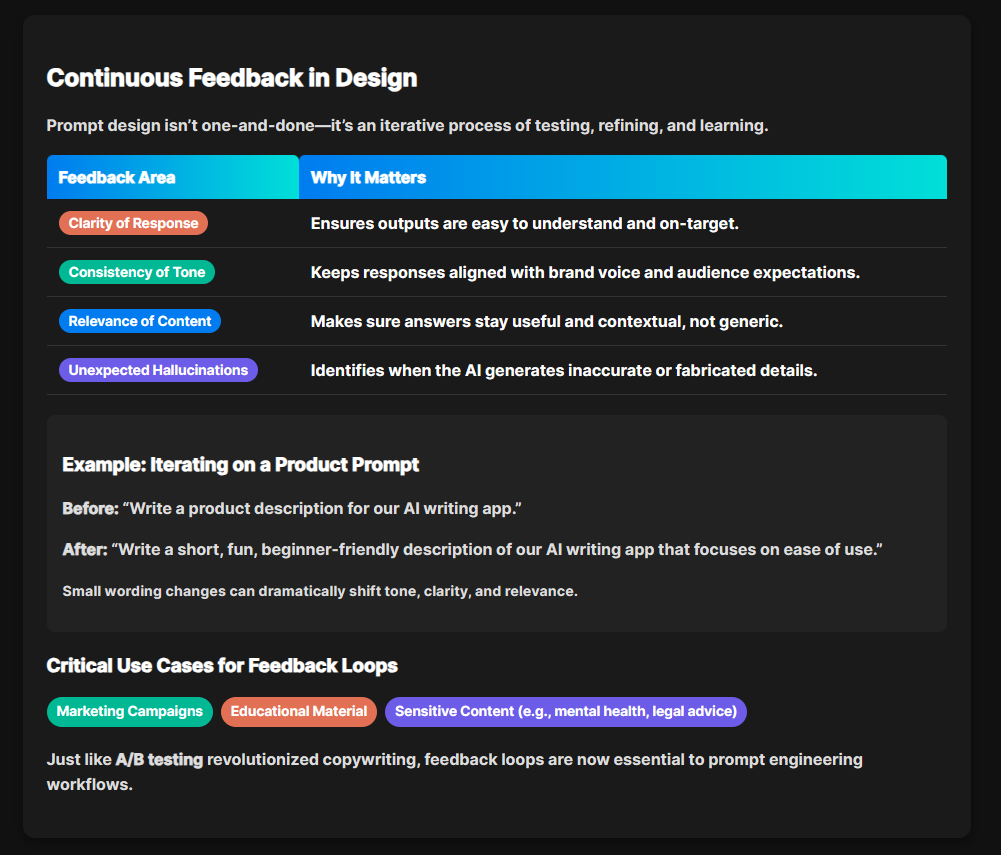

Continuous Feedback in Design

Prompt design isn’t a one-and-done deal. It’s iterative. Every prompt is a test, and every output is feedback. Smart designers keep refining prompts based on:

- Clarity of response

- Consistency of tone

- Relevance of content

- Unexpected hallucinations

For example, if you’re using ChatGPT to write product descriptions and it’s giving overly technical language, you tweak the prompt:

From: “Write a product description for our AI writing app.”

To: “Write a short, fun, beginner-friendly description of our AI writing app that focuses on ease of use.”

You test, tweak, test again. That’s the loop prompt designers live in, constantly learning how small wording changes create big differences.

This feedback loop is especially critical when the prompt is for:

- Marketing campaigns

- Educational material

- Sensitive content (e.g., mental health, legal advice)

Practices like A/B testing, long used in copywriting, are now being adopted into prompt design workflows, which shows just how essential iteration has become.

Prompt Testing Frameworks in Engineering

Prompt engineering takes iteration to the next level by building it into testing frameworks.

Engineers run automated tests on:

- Response length

- Cost per request

- Token usage

- Output bias

- Fact-checking accuracy

Let’s say you’re using a retrieval-based AI model that searches your internal knowledge base and builds a dynamic prompt. A good engineer would:

- Test different retrieval depths (how much info to pull)

- Adjust chunk sizes (how much text goes into each retrieved chunk)

- Add few-shot examples if the response is too generic

- Log prompt-response pairs for further tuning
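The retrieval-depth and chunk-size knobs can be illustrated with a toy retriever. This sketch assumes word-count chunking and ranks chunks by word overlap with the query, a deliberately simple substitute for the embedding similarity a vector database would compute; `DOC` is a hypothetical document.

```python
import re

# Hypothetical internal document used only for this example.
DOC = (
    "Refunds are accepted within 30 days of purchase. "
    "Shipping is free on orders over 50 dollars. "
    "Live chat support is available on weekdays only. "
    "Refund questions are handled by the billing team."
)


def chunk_text(text: str, chunk_size: int) -> list[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def word_set(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], depth: int) -> list[str]:
    """Return the `depth` chunks sharing the most words with the query.
    Word overlap stands in for embedding similarity."""
    query_words = word_set(query)
    ranked = sorted(chunks, key=lambda c: len(query_words & word_set(c)), reverse=True)
    return ranked[:depth]


def build_rag_prompt(query: str, document: str, chunk_size: int = 8, depth: int = 2) -> str:
    context = retrieve(query, chunk_text(document, chunk_size), depth)
    return "Answer using only this context:\n" + "\n---\n".join(context) + f"\n\nQuestion: {query}"


print(build_rag_prompt("How many days do I have to request refunds?", DOC))
```

Larger chunks and deeper retrieval give the model more context but also more tokens to pay for, which is why these two settings are worth testing in combination.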

Popular prompt testing tools and libraries include:

- PromptLayer

- LangChain eval

- OpenAI’s function calling

- Helicone

All of this shows that prompt engineering is software development, while prompt design is more like user experience design. Both iterate, but in very different ways.

Tools and Platforms for Prompting

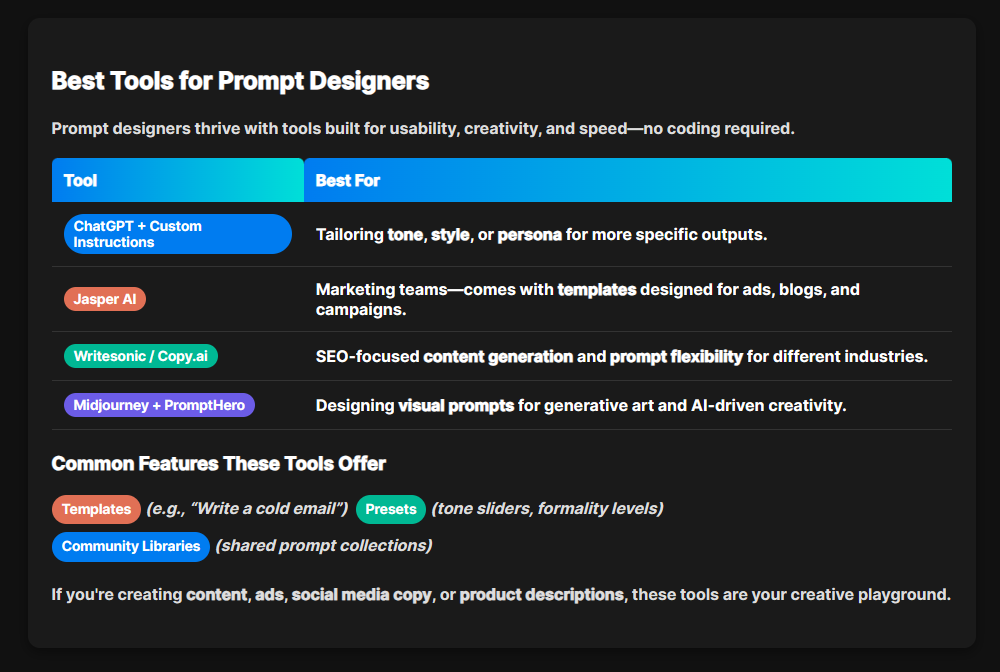

Best Tools for Prompt Designers

Prompt designers don’t necessarily need coding skills. They work with tools that emphasize usability, creativity, and speed. Some of the top tools include:

- ChatGPT + Custom Instructions: Great for tailoring tone or persona.

- Jasper AI: Built for marketers with built-in templates.

- Writesonic / Copy.ai: SEO-focused writing tools that support varied prompt styles.

- Midjourney + PromptHero: For visual prompt design in generative art.

These platforms often include:

- Templates (“Write a cold email”)

- Presets (tone sliders, formality levels)

- Community prompt libraries

If you’re creating content, ads, social media copy, or product descriptions, these tools are your playground.

Technical Stacks for Engineers

Prompt engineers need more than templates. They need flexible backends, logging capabilities, and data-driven prompt management.

Here’s what’s often in a prompt engineering stack:

- LangChain: Chains together prompts and tools for dynamic workflows.

- Vector databases (Pinecone, Weaviate): Store and retrieve relevant documents for RAG pipelines.

- LLM evaluation tools: Judge output quality programmatically.

- OpenAI API / Anthropic API: For integrating prompts into applications and deploying them.

They may also build prompt orchestration layers that adjust or replace prompts depending on user context, data freshness, or budget limits.

AI Prompt Testing Environments

Both designers and engineers benefit from controlled environments to test and iterate. Some examples:

- PromptLayer: Visualize prompt history and responses.

- Reka.ai: Real-time comparison of prompt effectiveness.

- Eval frameworks (LangSmith, promptfoo): Grade outputs across models.

These environments ensure repeatability, auditability, and scale, which is especially useful when prompting is central to your AI product or service.

Benefits and Challenges

Advantages of Good Prompt Design

A well-designed prompt can:

- Dramatically improve the quality of content output

- Save time and cost on editing and rewriting

- Align AI tone with brand voice

- Increase user satisfaction in AI-driven apps

It’s no exaggeration to say that a single sentence can make or break your outcome with AI. Prompt design is where clarity meets creativity, and it rewards those who test thoroughly.

Challenges Faced in Prompt Engineering

Prompt engineering brings scale, but it also brings complexity. Common challenges include:

- Token Limits: Each model has a maximum context window, so instructions, retrieved content, and the response must all fit within a fixed token cap.

- Latency: Slow responses are a killer in user-facing apps.

- Hallucinations: When AI confidently gives wrong info.

- Debugging: When dynamic prompts fail unpredictably.

- Cost Control: Long prompts mean high costs, since API usage is typically billed per token.
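One common way to respect token limits and control cost is to keep the instructions and question intact and add context only while it fits a budget. This sketch assumes a rough rule of thumb of about four characters per token for English text; exact counts require the model’s own tokenizer (for OpenAI models, the `tiktoken` library). The function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token in English); not exact."""
    return max(1, len(text) // 4)


def fit_to_budget(instructions: str, context_chunks: list[str], question: str, budget: int) -> str:
    """Always keep instructions and question; add context chunks, in priority order,
    only while the estimated total stays within `budget` tokens."""
    used = estimate_tokens(instructions) + estimate_tokens(question)
    kept = []
    for chunk in context_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break  # stop at the first chunk that would overflow the budget
        kept.append(chunk)
        used += cost
    return "\n\n".join([instructions, *kept, question])


chunks = [
    "Refunds are accepted within 30 days of purchase.",
    "Refund questions are handled by the billing team.",
    "Live chat support is available on weekdays only.",
]
prompt = fit_to_budget("Answer briefly using the context.", chunks, "How long do I have to request a refund?", budget=35)
print(prompt)
print("estimated tokens:", estimate_tokens(prompt))
```

Ordering chunks by relevance before calling `fit_to_budget` means the least useful context is the first to be dropped.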

Engineers must constantly monitor logs, user inputs, and AI behavior to catch these issues early. It’s not just prompting; it’s infrastructure-level reliability.

Overcoming Limitations

To tackle these issues, engineers and designers collaborate:

- Designers simplify and clarify language to reduce tokens.

- Engineers use caching, summarization, or dynamic prompts.

- Testing teams flag inaccurate outputs and tune system prompts.

Together, they balance creativity and control, crafting a user-friendly AI that performs at scale.
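Caching is the simplest of those tactics to sketch: if the same prompt reaches the same model twice, reuse the stored answer instead of paying for a second call. This in-memory version is illustrative only (`PromptCache` and `example-model` are hypothetical), and it suits cases where a repeated answer is acceptable, such as FAQ-style queries.

```python
import hashlib
from typing import Callable


class PromptCache:
    """In-memory cache keyed by a hash of model name + prompt, so identical
    requests are answered without a second (billed) model call."""

    def __init__(self, model_name: str, call_model: Callable[[str], str]):
        self.model_name = model_name
        self.call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def _key(self, prompt: str) -> str:
        # Collapse whitespace so trivially different prompts share one entry.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(f"{self.model_name}\n{normalized}".encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self.call_model(prompt)
        self._store[key] = response
        return response


cache = PromptCache("example-model", lambda p: p.upper())  # stand-in for a real model call
cache.get("Summarize   our refund policy.")
cache.get("Summarize our refund policy.")
print(cache.hits, cache.misses)
```

A production cache would also need an expiry policy so answers don’t go stale when the underlying data changes.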

Conclusion

Prompt design and prompt engineering may seem like two sides of the same coin, but they serve very different purposes. Prompt design is your front-line communicator: clear, concise, and creative. Prompt engineering, on the other hand, is the back-end architect building scalable, reliable, and cost-effective systems around those prompts.

In short:

- Prompt design is what you say to the AI.

- Prompt engineering is how that message scales, performs, and integrates into the bigger system.

As AI continues to reshape industries from marketing to medicine, understanding both roles isn’t just helpful; it’s essential.

FAQs

1. What makes a good prompt design?

A good prompt is specific, clear, context-rich, and outcome-focused. It should guide the AI toward a desired tone, structure, or action.

2. Can I become a prompt engineer without coding?

You can start with no code, but to engineer prompts at scale (especially for apps), you’ll need to learn to work with APIs, frameworks like LangChain, and data pipelines.

3. Do prompt engineers use ChatGPT?

Yes! Many engineers use ChatGPT to prototype, test outputs, and even build prompt libraries. It’s often the starting point for more technical applications.

4. What are examples of bad prompt design?

Vague commands like “Tell me something interesting” often result in irrelevant or generic responses. Lack of structure or clarity kills quality.

5. Is prompt engineering a long-term career?

Very likely. As AI systems grow more complex, prompt engineering skills should remain in high demand across tech, healthcare, law, and more.