Introduction to Prompt Engineering

Prompt engineering has become one of the most talked-about skills in artificial intelligence. But here’s the truth: it’s not just a buzzword; it’s the secret sauce behind almost every impressive output from AI models like GPT-4 or Claude. Whether it’s writing an email, generating code, answering complex questions, or translating text, the way you prompt the AI can make or break the result.

So why does it matter so much? Because the power of AI lies in its ability to understand and respond to natural language. Prompt engineering taps into that potential by turning vague human intent into structured inputs that machines can understand. Instead of programming complex algorithms, you’re crafting smart, well-thought-out sentences and queries that guide AI systems to perform specific tasks.

In business, prompt engineering improves chatbots, enhances customer service, and even automates copywriting. In healthcare, it helps doctors extract insights from patient data. In education, it powers personalized tutoring tools. That’s why major tech companies are investing heavily in people who understand the art and science of prompting.

Real-Life Impact and Applications of Prompt Engineering

Let’s talk real-world. You know that AI-powered résumé builder that crafts a stunning summary from just a few bullet points? Prompt engineering. Or that app that turns a rough blog idea into a polished post? Again, prompt engineering. The same applies to tools that generate SQL queries, summarize research papers, or assist in legal writing.

Prompt engineering is now considered a core component of AI-driven product development. Imagine asking a virtual assistant, “Plan my meals for the week with a $50 budget.” A vague question for a human, but with prompt engineering, the AI can break this down into dietary needs, cost constraints, and meal preferences to deliver a detailed answer.

In fact, the real magic lies in making the AI do what you want without explicitly programming it. It’s about finding the perfect balance between precision and creativity. That’s the beauty and the challenge of prompt engineering.

Understanding the Basics of Prompt Engineering

Definition and Explanation of Prompts

Before we dive too deep, let’s start at the beginning: what even is a prompt? In the simplest terms, a prompt is the input or instruction you give to an AI model. Think of it like giving directions to a smart assistant. Just as your GPS needs a clear address to get you to the right location, an AI model needs a well-crafted prompt to generate useful results.

Prompts can be as simple as “Translate this to Spanish,” or as complex as “Write a formal email apologizing for a delayed delivery and offer a 10% discount.” But here’s the key: how you word that prompt directly influences the quality, relevance, and tone of the AI’s response. That’s where prompt engineering steps in to carefully design that input for optimal output.

In technical terms, prompt engineering is the iterative process of crafting, testing, and refining prompts to steer the behavior of AI models such as large language models (LLMs). It’s a mix of art and science, a bit like writing a spell for a wizard. Say it right, and the magic works perfectly.

What is Prompt Engineering in the Context of AI and LLMs?

Now, let’s get into the meat of it. Large Language Models (LLMs) like GPT-5, Claude, or DeepSeek aren’t just trained to spit out words; they’re trained to pick up on context, style, tone, and intent from the prompt they’re given. Prompt engineering, in this context, is about leveraging that power.

When you interact with an LLM, you’re essentially asking it to continue a conversation. The trick is to phrase your side of the conversation (the prompt) in a way that sets up the AI for success. Want it to act like a software engineer? Tell it to. Need it to write like Shakespeare? Ask in that tone. The possibilities are vast, but only if you know how to prompt effectively.

Think of prompt engineering as programming in plain English (or any natural language). No syntax rules, no compilation. Just instructions. Yet, it’s still programming because you’re setting parameters and logic, albeit in a more human-friendly format.

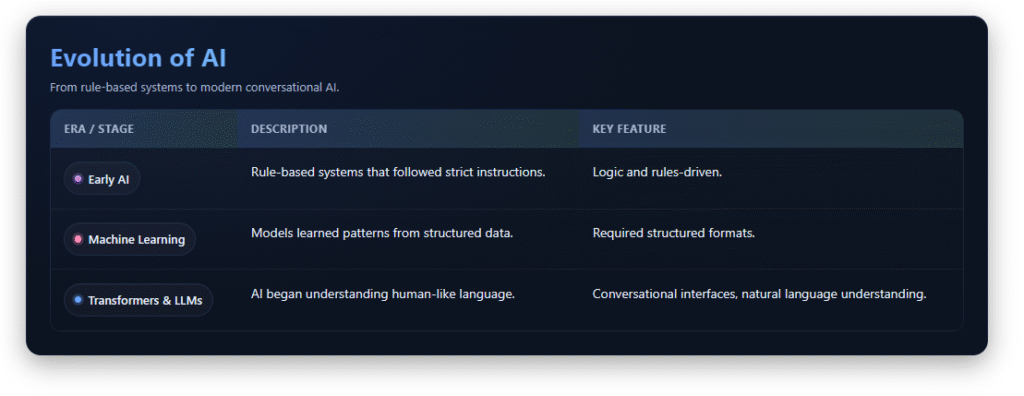

Evolution of Prompt Engineering – From Rules to Language Models

Prompt engineering isn’t entirely new; it just evolved with the rise of LLMs. In the earlier days of AI, developers wrote hard-coded rules to define how systems should respond. If a customer said “cancel my subscription,” the system matched keywords and triggered a canned response. But it lacked nuance and context.

Then came machine learning, where models learned patterns from data. But they still needed structured formats. It wasn’t until transformer models and LLMs entered the scene that AI could understand human-like language. This shifted the paradigm from rule-based systems to conversational interfaces.

Now, we don’t need to train an AI model from scratch every time. We prompt it. We design inputs that nudge the model in the right direction. And guess what? The better your prompt, the smarter the output.

Types of Prompts and Prompting Techniques

1. Zero-shot Prompting

Zero-shot prompting is where things get really interesting. This technique means you give the AI no examples, just a plain instruction. For instance, you might write: “Summarize this article about climate change in 3 bullet points.” The AI doesn’t get any sample answers to imitate; it relies purely on what it learned in training and its understanding of natural language to respond accurately.

Why does this matter? Because zero-shot prompts test how well the model generalizes. It’s a true test of its intelligence. And surprisingly, with advanced models like GPT-4 or Claude, zero-shot prompting often works really well, especially for simple tasks like translations, summaries, and classifications.

However, there’s a catch. For more nuanced tasks, like mimicking a specific tone or generating highly structured outputs, zero-shot prompting may fall short. That’s where other techniques come into play. Still, zero-shot prompting is incredibly useful for speed and efficiency, especially when you want quick responses without designing complex inputs.

2. One-shot and Few-shot Prompting

Enter one-shot and few-shot prompting, the “training wheels” of prompt engineering. In these techniques, you include one or more examples in your prompt to help guide the AI. Here’s how it works:

One-shot Prompting: You provide a single example.

Example:

Prompt: “Translate English to French:

‘Hello, how are you?’ => ‘Bonjour, comment ça va?’

Now translate: ‘Good morning.’”

Few-shot Prompting: You provide several examples.

This builds a small pattern or template that the AI follows.

Few-shot prompting is particularly useful when consistency is critical. Say you’re asking the AI to format data in a specific way or follow a particular tone. These examples act as subtle “nudges,” showing the AI how to behave.

One of the most famous examples of few-shot prompting was OpenAI’s GPT-3 demo. With just a few examples, it could write stories, solve math problems, or convert natural language to SQL, all by mimicking the format.

In essence, few-shot prompting lets you “teach” the model on the fly without retraining it. It’s a game-changer for prototyping, rapid development, and testing.
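To make the pattern concrete, here is a minimal sketch of how one-shot and few-shot prompts could be assembled in Python. The helper name `build_few_shot_prompt` and the two extra translation pairs are illustrative assumptions, not part of any library; the function only builds a string and does not call a model.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Join an instruction, worked examples, and a new input into one prompt.

    Hypothetical helper for illustration: the examples show the model the
    pattern to follow, and the final line asks it to continue that pattern.
    """
    lines = [instruction]
    for source, target in examples:
        lines.append(f"'{source}' => '{target}'")
    lines.append(f"Now translate: '{query}'")
    return "\n".join(lines)


# One-shot: a single example.
one_shot = build_few_shot_prompt(
    "Translate English to French:",
    [("Hello, how are you?", "Bonjour, comment ça va?")],
    "Good morning.",
)

# Few-shot: several examples that establish a clearer pattern.
few_shot = build_few_shot_prompt(
    "Translate English to French:",
    [
        ("Hello, how are you?", "Bonjour, comment ça va?"),
        ("Thank you.", "Merci."),
        ("Good night.", "Bonne nuit."),
    ],
    "Good morning.",
)

print(few_shot)
```

Keeping the examples in a list makes it easy to add, remove, or reorder them while you test which set gives the most consistent output.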

3. Chain-of-Thought Prompting

Sometimes, asking for an answer just isn’t enough. You need the why behind it. That’s where chain-of-thought (CoT) prompting shines. It’s all about asking the model to reason step by step, just like you’d do when solving a math problem or analyzing a scenario.

For example:

Prompt: “If John has 3 apples and buys 2 more, how many does he have now? Let’s think step by step.”

The AI then walks through the logic:

1. John starts with 3 apples.

2. He buys 2 more.

3. 3 + 2 = 5 apples.

Chain-of-thought prompting is especially powerful for complex reasoning tasks like arithmetic, logic puzzles, or multi-step workflows. It encourages the model to “think out loud,” improving both accuracy and transparency.

Want even better results? Pair CoT prompting with few-shot examples. It’s like teaching the AI not just what to say, but how to think.
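In code, a chain-of-thought prompt can be as simple as appending the reasoning cue to a question. The sketch below assumes a hypothetical helper called `with_chain_of_thought`; it only builds the prompt text and leaves the model call out.

```python
# The cue phrase used in the example above.
COT_CUE = "Let's think step by step."


def with_chain_of_thought(question):
    """Append the step-by-step cue to a question (hypothetical helper)."""
    return f"{question.strip()} {COT_CUE}"


prompt = with_chain_of_thought(
    "If John has 3 apples and buys 2 more, how many does he have now?"
)
print(prompt)
```

Because the cue lives in one constant, you can swap in a different wording and compare how the model’s reasoning changes.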

4. Role Prompting and Instruction-based Prompting

Ever wish you could assign your AI a specific role? You can, and it’s called role prompting. In this technique, you tell the model who it should be before giving it the task.

Example:

Prompt: “You are a career coach. Give advice to a 25-year-old looking to switch careers into tech.”

Role prompting helps the AI adopt a persona, making the output more contextually relevant and realistic. It’s widely used in customer support, writing assistants, and even mental health chatbots.

Instruction-based prompting, on the other hand, is more task-focused. You give a clear command, like “Write a 100-word product description for a new smartphone.” The focus is on what you want, not necessarily who’s saying it.

Both techniques are key tools in your prompt engineering toolkit, especially when you want to steer tone, style, or domain expertise.
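Many chat-style interfaces accept a list of messages tagged with a role, and that is a natural place to put a persona. The sketch below assumes that general message shape (a `role` and a `content` field); it is not tied to a specific vendor’s API, and `build_messages` is a hypothetical helper.

```python
def build_messages(task, role=None):
    """Build a chat-style message list (assumed format, not a specific API).

    With a role, the persona goes in a system message (role prompting).
    Without one, only the task is sent (instruction-based prompting).
    """
    messages = []
    if role is not None:
        messages.append({"role": "system", "content": f"You are {role}."})
    messages.append({"role": "user", "content": task})
    return messages


# Role prompting: persona first, then the task.
role_prompt = build_messages(
    "Give advice to a 25-year-old looking to switch careers into tech.",
    role="a career coach",
)

# Instruction-based prompting: just the task.
instruction_prompt = build_messages(
    "Write a 100-word product description for a new smartphone."
)

print(role_prompt)
print(instruction_prompt)
```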

| Prompt Type | Description | Example | Best Use Cases | Key Benefits |
|---|---|---|---|---|
| Zero-shot Prompting | Giving the AI a direct instruction with no prior examples or context. | “Summarize this article about climate change in 3 bullet points.” | Quick summaries, classifications, simple tasks, translations. | Fast and efficient; tests the model’s generalization ability. |
| One-shot Prompting | Including a single example to guide the AI’s response. | “Translate: ‘Hello, how are you?’ => ‘Bonjour, comment ça va?’ Now translate: ‘Good morning.’” | Tasks needing minor guidance, tone matching, short format changes. | Adds context; more control than zero-shot without being complex. |
| Few-shot Prompting | Providing multiple examples to establish a clear pattern or format. | Multiple input-output examples before the task prompt. | Data formatting, story writing, structured outputs, SQL queries. | Teaches behavior on the fly; increases consistency and reliability. |
| Chain-of-Thought (CoT) Prompting | Asking the model to reason through the problem step by step. | “John has 3 apples, buys 2 more. How many? Let’s think step by step.” | Arithmetic, logical reasoning, multi-step problems. | Improves reasoning accuracy; reveals model’s thought process. |
| Role Prompting | Assigning the AI a persona or identity before the task. | “You are a career coach. Give advice to someone switching careers.” | Customer support, writing personas, coaching bots, simulations. | Enhances relevance; output is more human-like and domain-aware. |
| Instruction-based Prompting | Giving a direct, clear task command without role-play. | “Write a 100-word product description for a new smartphone.” | Product copy, content generation, task-based automation. | Simple, effective, and easy to scale across use cases. |

The Role of Prompt Engineering in LLMs like GPT

How Prompt Engineering Impacts AI Output Quality

Here’s the raw truth: LLMs like GPT are only as smart as the prompt you feed them. You can’t just type a vague instruction and expect perfection. The structure, wording, and clarity of your prompt determine everything from grammar and tone to logic and creativity.

A poorly designed prompt might yield generic or even incorrect answers. A well-constructed one, though? That’s where the magic happens. Prompt engineering is like being the director of a movie. The AI is your actor. And the prompt? That’s your script.

With GPT models, the stakes are even higher. These systems are trained on trillions of words. They can write sonnets, debug code, or simulate a PhD professor. But only if you ask the right way. That’s why prompt engineering is considered the frontline interface between humans and next-gen AI.

In essence, prompt engineering is not just a productivity trick; it’s a vital communication skill.

Fine-Tuning vs. Prompt Engineering

You might wonder: why prompt an AI when you can just fine-tune it for your task? Good question.

Fine-tuning involves retraining the model on new data to improve performance for specific tasks. It’s powerful but resource-intensive: you need data, compute power, and time.

Prompt engineering, on the other hand, is lightweight, fast, and flexible. You don’t change the model; you change the input. That makes it perfect for experimentation and real-time applications.

Think of fine-tuning as customizing a car engine. Prompt engineering is just changing how you drive it.

In practice, both approaches can be combined. Fine-tune a model for a domain (say legal or medical), then use prompt engineering to guide its responses. This hybrid approach is already being used in enterprise AI products to balance precision and flexibility.

Skills Required for Effective Prompt Engineering

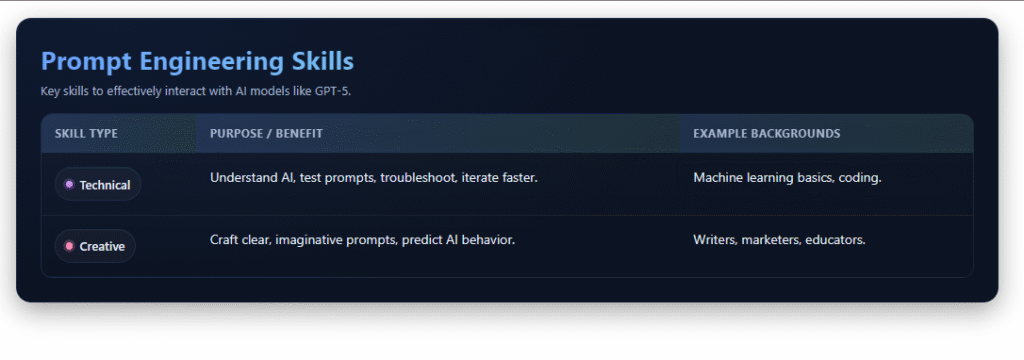

Technical Skills vs. Creative Skills

You don’t need to be a computer scientist to become a great prompt engineer. What you do need is a unique blend of technical understanding and creative flair.

Technical Skills help you understand how AI models work. Knowing a bit about machine learning, token limits, and model behavior gives you an edge. You’ll know how to test prompts, why the model might fail, and how to iterate quickly.

Creative Skills are just as important. Crafting a prompt is like writing a magic spell. You need clarity, imagination, and empathy. Can you explain a task clearly? Can you imagine how a machine will interpret it? That’s where creativity kicks in.

Some of the most successful prompt engineers are former writers, marketers, and educators. Why? Because they know how to use language effectively, and that’s what prompting is all about.

In short, prompt engineering is a multidisciplinary skill. The more diverse your toolkit, the more powerful your prompts.

Tools and Platforms for Practicing Prompt Engineering

The good news? You don’t need a lab or a PhD to get started. There are tons of tools and platforms available for hands-on practice.

Here are a few must-try options:

- OpenAI’s ChatGPT: Perfect for real-time testing and rapid feedback.

- PromptHero: A community-driven prompt gallery to learn from others.

- AI Dungeon: A fun, gamified way to test narrative-style prompts.

- Replit + Code LLMs: Great for code-focused prompt engineering.

- Notion AI / Jasper: Commercial tools that let you experiment with copywriting prompts.

Also, platforms like GitHub, Reddit’s r/PromptEngineering, and Discord communities offer deep insights, prompt libraries, and case studies.

Practicing daily, documenting what works (and what doesn’t), and analyzing AI responses is the fastest way to level up.

Best Practices in Prompt Engineering

Crafting Clear and Concise Prompts

One golden rule stands tall in prompt engineering: clarity is king. The clearer your prompt, the better your results. AI models excel at following instructions, but they aren’t mind readers. Vague, wordy, or overly complex prompts often lead to unpredictable or generic outputs.

A clear prompt should:

- Be specific about the task.

- Indicate the desired format or tone.

- Avoid filler or unnecessary words.

For example:

❌ “Tell me something about marketing.”

✅ “Write a 100-word introduction to digital marketing for beginners in a friendly tone.”

Notice the difference? The second prompt guides the AI with purpose.

Also, be concise. Long-winded prompts can confuse the model or hit token limits. Aim for brief, punchy instructions. The best prompts are like good tweets: short, sharp, and impactful.

Another tip? Add structure. If you want bullet points, say it. If you want paragraphs, ask for it. The more structure you build into your prompt, the more structured the output becomes.
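One way to keep prompts specific and structured is to build them from named parts. The sketch below is only an illustration: `build_prompt` and its parameters are hypothetical names, and the labels (“Audience”, “Tone”, and so on) are one possible layout rather than a required format.

```python
def build_prompt(task, audience=None, tone=None, word_limit=None, output_format=None):
    """Assemble a prompt from a task plus optional constraints.

    Hypothetical helper: each constraint gets its own labeled line so the
    model (and the person reviewing the prompt) can see it at a glance.
    """
    parts = [task.strip()]
    if audience:
        parts.append(f"Audience: {audience}.")
    if tone:
        parts.append(f"Tone: {tone}.")
    if word_limit:
        parts.append(f"Length: about {word_limit} words.")
    if output_format:
        parts.append(f"Format: {output_format}.")
    return "\n".join(parts)


prompt = build_prompt(
    "Write an introduction to digital marketing.",
    audience="beginners",
    tone="friendly",
    word_limit=100,
    output_format="one short paragraph",
)
print(prompt)
```

Leaving a parameter out simply drops that line, so the same helper covers both quick prompts and fully specified ones.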

Iterative Testing and Prompt Refinement

Prompt engineering is not a one-and-done process. It’s iterative. You write a prompt, test it, review the output, tweak it, and repeat. Great prompts often go through several revisions before hitting the sweet spot.

Let’s say you prompt: “Explain how blockchain works.”

The answer might be too technical.

So you revise: “Explain how blockchain works in simple terms for a 12-year-old.”

Better.

Then refine further: “Explain how blockchain works in simple terms using a pizza delivery analogy.”

Now you’re speaking the AI’s language.

Each iteration sharpens the direction you give the model. This trial-and-error approach is not only expected but necessary. Even professionals follow this process, especially when building AI tools for healthcare, education, or business applications.

Tip: Keep a prompt notebook or database. Document what worked and what didn’t. Over time, you’ll build your own personal prompt library that you can reuse and remix.
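A prompt notebook does not need special tooling. Here is a minimal sketch that logs each revision with a note and a rating, using the blockchain prompts from this section. The class names, the 1 to 5 rating scale, and the ratings themselves are assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRecord:
    prompt: str
    notes: str
    rating: int  # hypothetical scale: 1 (poor) to 5 (great)


@dataclass
class PromptNotebook:
    records: list = field(default_factory=list)

    def log(self, prompt, notes, rating):
        """Store one prompt attempt with what you observed."""
        self.records.append(PromptRecord(prompt, notes, rating))

    def best(self):
        """Return the highest-rated attempt so far."""
        return max(self.records, key=lambda record: record.rating)


notebook = PromptNotebook()
notebook.log("Explain how blockchain works.", "Too technical.", 2)
notebook.log(
    "Explain how blockchain works in simple terms for a 12-year-old.",
    "Better, but still abstract.",
    3,
)
notebook.log(
    "Explain how blockchain works in simple terms using a pizza delivery analogy.",
    "Clear and memorable.",
    5,
)

print(notebook.best().prompt)
```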

Avoiding Ambiguity in Prompts

Ambiguity is the enemy of great prompts. If your instruction can be interpreted in multiple ways, chances are the AI will take the wrong route or deliver something bland and vague.

For instance:

❌ “Write a report.” (Too broad. About what? For whom?)

✅ “Write a 300-word market analysis report for tech investors on wearable fitness devices.”

Ambiguity leads to randomness. Precision leads to quality.

Avoid open-ended prompts unless you’re exploring creative outputs. And if you do go broad, set boundaries. For example, instead of asking for “a story,” ask for “a 500-word story set in a dystopian future where AI controls society.”

Pro tip: Use delimiters like triple quotes, markdown headings, or numbered instructions to clearly separate parts of your prompt.
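As a small sketch of the delimiter idea, the hypothetical helper below wraps the material to be processed in triple quotes so it cannot be confused with the instruction. The text passed in is a placeholder, not real content.

```python
def delimit(instruction, text, delimiter='"""'):
    """Separate the instruction from the input text with a delimiter.

    Hypothetical helper: the delimiter goes on its own line before and
    after the text so the boundaries are unambiguous.
    """
    return f"{instruction}\n{delimiter}\n{text.strip()}\n{delimiter}"


prompt = delimit(
    "Summarize the text between the triple quotes in 3 bullet points.",
    "PLACEHOLDER: paste the article text here.",
)
print(prompt)
```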

Common Mistakes and How to Avoid Them

Misleading Language and Poor Context

One of the most common mistakes in prompt engineering is using language that misguides the model. This includes vague words, contradictory instructions, or incorrect assumptions.

For example:

❌ “Write a friendly but serious angry email.” (That’s confusing. Should it be friendly, serious, or angry?)

✅ “Write a professional email expressing dissatisfaction with a delayed delivery. Keep the tone polite but firm.”

Misleading prompts often produce disjointed, confusing results. Always double-check if your wording aligns with your intent.

Another issue is lack of context. If you expect the AI to behave a certain way (say, referencing prior information or continuing a task), you must remind it of that in your prompt. Unlike humans, LLMs have no memory of their own; they only see what is inside the current conversation window, so anything outside it has to be repeated.

Tip: Always include relevant background and clarify the task. Even if it feels redundant, it improves results.

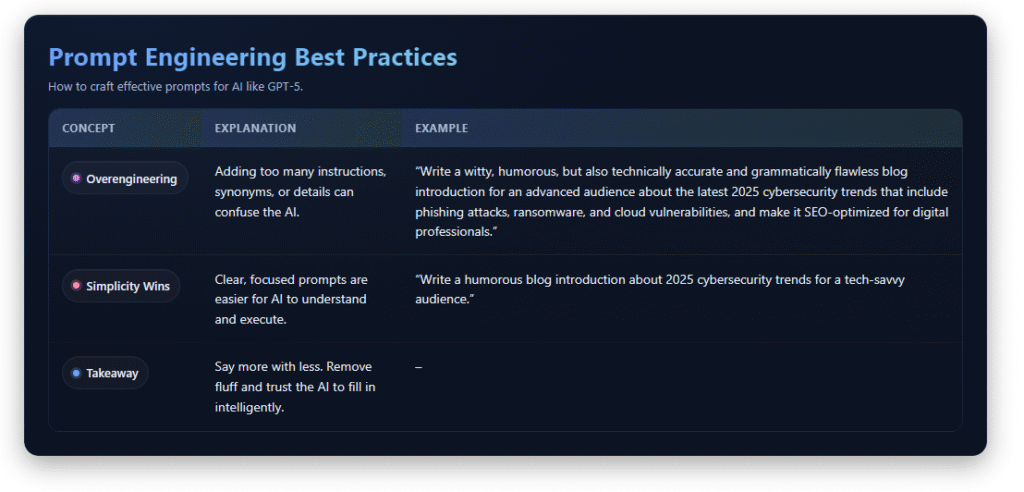

Overengineering the Prompt

There’s such a thing as trying too hard. New prompt engineers often fall into the trap of overloading their prompts with excessive instructions, synonyms, and repetition, thinking it’ll help.

But here’s the kicker: AI models thrive on simplicity. The more cluttered your prompt, the harder it is for the model to interpret what’s truly important.

Example of overengineering:

“Write a witty, humorous, but also technically accurate and grammatically flawless blog introduction for an advanced audience about the latest 2025 cybersecurity trends that include phishing attacks, ransomware, and cloud vulnerabilities, and make it SEO-optimized for digital professionals.”

Now compare with:

“Write a humorous blog introduction about 2025 cybersecurity trends for a tech-savvy audience.”

Cleaner. Easier to understand. Easier for the AI to execute.

The takeaway? Say more with less. Focus your instructions, eliminate fluff, and trust the model to fill in the blanks intelligently.

Prompt Engineering in Different Domains

1. Prompting for Content Creation

One of the hottest areas for prompt engineering today is content creation. Writers, marketers, bloggers, and even social media managers are using prompts to churn out high-quality content faster than ever.

You can generate:

- Blog intros and conclusions.

- YouTube video scripts.

- Product descriptions.

- Email campaigns.

- Social media captions.

And it doesn’t stop there. You can specify tone (“funny,” “serious,” “conversational”), format (“bullet points,” “FAQs,” “quotes”), and even platform-specific rules (“keep under 280 characters for Twitter”).

The key is to be specific. A prompt like:

“Write a 150-word social media post promoting our eco-friendly shoes for Gen Z”

is way more effective than “Promote our shoes.”

Prompt engineering empowers even small businesses to scale their content operations without hiring a large marketing team.

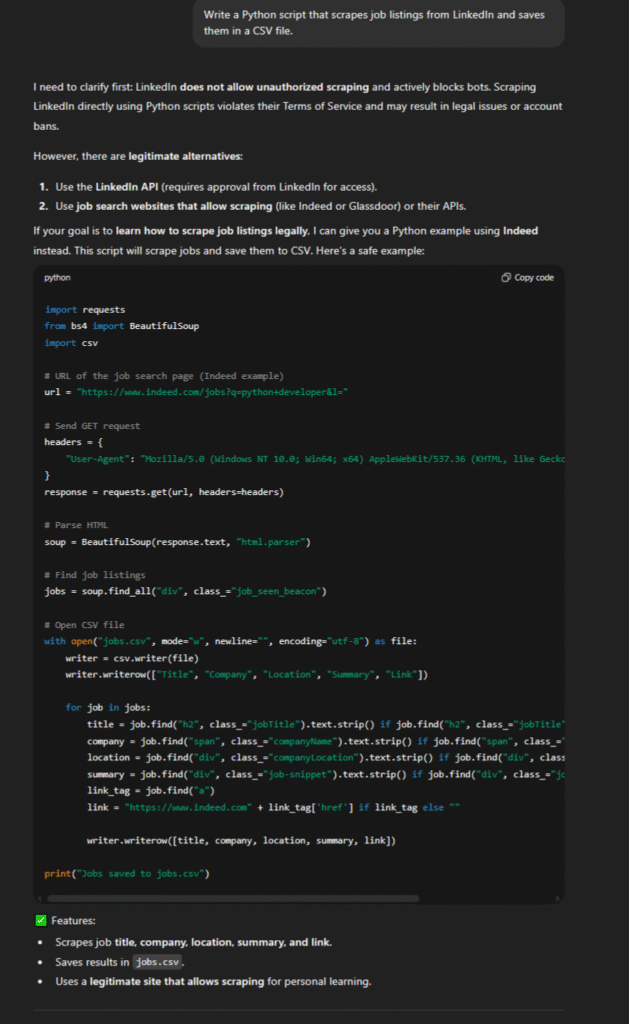

2. Prompting for Code Generation

Yes, you can write code with prompts. Tools like GitHub Copilot, OpenAI Codex, and GPT-4 make it possible to turn natural language into functional code.

For example:

“Write a Python script that scrapes job listings from LinkedIn and saves them in a CSV file.”

Prompt engineering helps with:

- Writing code from scratch.

- Debugging.

- Explaining code snippets.

- Generating documentation.

- Refactoring or optimizing code.

You can also chain prompts:

1. Ask for code.

2. Ask the model to explain it.

3. Then ask it to test or debug.

This workflow is transforming how developers work, especially juniors who benefit from instant support and guidance.
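The chained workflow above can be sketched as a small pipeline in which each step feeds the previous output into the next prompt. Everything here is an assumption for illustration: `run_chain` is a hypothetical helper, and `fake_model` is a stand-in that echoes the prompt so the example runs without any AI service. In practice you would pass in a function that calls your model of choice.

```python
def run_chain(model, task):
    """Run three prompts in sequence: write code, explain it, then review it.

    `model` is any callable that takes a prompt string and returns text.
    """
    code = model(f"Write code for this task: {task}")
    explanation = model(f"Explain what this code does:\n{code}")
    review = model(f"Test this code and point out any bugs:\n{code}")
    return {"code": code, "explanation": explanation, "review": review}


def fake_model(prompt):
    """Stand-in for a real model: echoes the first line of the prompt."""
    first_line = prompt.splitlines()[0]
    return f"<reply to: {first_line}>"


result = run_chain(fake_model, "reverse a string in Python")
for step, output in result.items():
    print(step, "->", output)
```

Passing the model in as a parameter keeps the chain logic separate from any particular provider, which also makes it easy to test.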

3. Prompting for Data Analysis and Chatbots

Data scientists and business analysts are also getting in on the action. Prompt engineering can help analyze, summarize, and interpret data outputs. In fact, LLMs can act as your personal data assistant if prompted well.

Example prompt:

“Explain what the following data table suggests about customer churn. Highlight any red flags.”

And for chatbots? Prompt engineering helps define bot personality, tone, and scope. Instead of hard-coding responses, you guide the bot’s behavior using well-structured instructions.

This approach is revolutionizing customer support, lead generation, and onboarding experiences across industries.

| Domain | Common Applications | Key Benefits |
|---|---|---|
| Content Creation | Blog writing, video scripting, product descriptions, emails, social media content | Accelerates content production, allows tone/format control, supports small teams and creators |
| Code Generation | Writing, debugging, documenting, and refactoring code | Boosts developer productivity, supports learning, automates repetitive coding tasks |
| Data Analysis & Chatbots | Data summarization, insight extraction, chatbot tone and behavior configuration | Enhances business insights, improves chatbot quality, streamlines customer service and support |

The Future of Prompt Engineering

Automation and Tools for Prompt Generation

As AI models grow in sophistication, the future of prompt engineering will shift from manual crafting to semi-automated prompt generation. That means tools and platforms will help users build smarter prompts faster, using AI to engineer the prompts themselves. Yes, AI writing prompts for AI.

Emerging platforms are already experimenting with:

- Prompt templates that adapt based on input.

- Dynamic prompt generation using prior user behavior.

- Prompt marketplaces where you can buy and sell well-crafted prompts.

- Visual prompt builders for non-coders to construct complex input/output flows.

Imagine tools that analyze your previous prompts, suggest improvements, auto-format the structure, and even A/B test multiple versions. That’s where the field is heading.

In enterprise settings, this will revolutionize how AI solutions are deployed: scaling faster, with less friction, and with greater consistency across departments.

Integration with Other AI Technologies

Prompt engineering won’t exist in isolation. It’s becoming part of a broader AI ecosystem. You’ll soon see tighter integrations between prompt systems and:

- Search engines (e.g., ChatGPT with Bing or Google).

- Data analytics platforms (e.g., Tableau, Power BI).

- Creative tools (e.g., Canva, Adobe tools).

- Voice assistants and IoT (e.g., Siri, Alexa with prompt-based workflows).

Prompt engineering will also feed into multi-modal AI where text, images, audio, and video prompts all combine into richer, more interactive systems. This could power everything from virtual tutors to medical assistants, using natural language as the universal interface.

Bottom line? Prompt engineering is evolving from a niche skill to a foundational layer of the AI-first future.

Prompt Engineering vs Traditional Programming

Key Differences and Overlaps

Traditional programming relies on strict syntax and logic. You write code, compile, debug, and run it. Every action is deliberate and explicit.

Prompt engineering, by contrast, is flexible, conversational, and creative. You describe what you want using natural language, and the model interprets and responds based on its training.

Here’s a quick comparison:

| Feature | Traditional Programming | Prompt Engineering |
|---|---|---|
| Language | Programming languages (e.g., Python, Java) | Natural language (English, etc.) |
| Syntax | Strict and structured | Flexible and informal |
| Learning Curve | Steep (requires coding knowledge) | Easier for non-tech users |
| Output | Deterministic | Probabilistic (based on model behavior) |

Despite differences, overlaps exist. Both require clear logic, problem-solving, and iterative testing. And both benefit from documentation, version control, and performance evaluation.

Prompt engineering doesn’t replace programming; it complements it. Developers use prompts to speed up documentation, generate test cases, or create boilerplate code. Non-coders use prompts to tap into powerful AI workflows without learning to code.

Why Prompting Might Be the Future

With AI models becoming more powerful and accessible, natural language will likely become the default interface for digital tools. Prompting bridges the gap between human intent and machine execution. And in a world where speed and adaptability matter, that’s a massive advantage.

We’re already seeing AI-driven design, development, education, and research enabled by prompting. As the tools improve, prompting will shift from novelty to necessity.

Just like Google search taught people to “search smart,” prompt engineering is teaching people to “think like AI.”

Ethical Considerations in Prompt Engineering

Bias, Fairness, and Responsibility

AI models are only as good as the data they’re trained on, and unfortunately, that data can include biases. Prompt engineering doesn’t eliminate this risk; it amplifies the importance of ethical design.

Let’s say you ask an AI:

“Write a story about a successful entrepreneur.”

If the model always defaults to a male CEO in a tech company, that’s a problem.

As a prompt engineer, you have the power (and responsibility) to guide the AI toward more inclusive, fair, and balanced outputs.

Some ethical practices include:

- Asking explicitly for diverse examples.

- Avoiding prompts that reinforce stereotypes.

- Including disclaimers or fact-checking sensitive content.

- Regularly auditing prompt outputs for bias.

In fields like healthcare, law, and education, ethical prompting is non-negotiable. A biased or misleading prompt could lead to real-world harm.

Transparency and Explainability

Another challenge is explainability. Why did the AI respond that way? What part of the prompt influenced the output?

Prompt engineers must learn to dissect and explain prompt structures, especially in high-stakes environments. This improves trust, accountability, and user understanding.

In the future, transparency tools will let users track prompt-response flows, highlight influential words, and suggest alternatives. Until then, the best defense is clear writing and regular testing.

How to Start Learning Prompt Engineering

Beginner Resources and Courses

Ready to dive in? Great. Here are some beginner-friendly resources to start learning prompt engineering:

- OpenAI’s documentation – Free, clear examples on how prompts work.

- LearnPrompting.org – A fantastic beginner course and community resource.

- YouTube channels – Channels like “Prompt Engineering 101” or “AI Explained” break down techniques with visuals.

- Coursera & Udemy courses – Look for courses on prompt design, LLMs, and generative AI tools.

- GitHub repositories – Search for “awesome-prompt-engineering” for curated prompt libraries.

You don’t need to master it all at once. Start small. Try crafting prompts for ChatGPT. Notice how changes in wording shift the tone or format. Keep a journal of successes and failures.

Prompt engineering is a learn-by-doing craft.

| Resource Type | Where to Start | Why It’s Useful |
|---|---|---|
| Official Docs | OpenAI Documentation | Free, clear examples of prompt structure and capabilities. |
| Beginner Courses | LearnPrompting.org | Interactive tutorials and real-world examples for newcomers. |
| Video Learning | YouTube: “Prompt Engineering 101”, “AI Explained” | Visual guides that simplify complex techniques with step-by-step demos. |
| Online Courses | Coursera, Udemy: Search for Prompt Engineering, LLMs, Generative AI | Structured learning with certification options. |
| Code & Prompt Libraries | GitHub: Search awesome-prompt-engineering | Curated examples, tools, and prompt templates to explore and remix. |

Practice Projects and Communities

The best way to learn? Practice.

Try these mini-projects:

- Write a prompt that creates a daily planner.

- Build a chatbot that gives movie recommendations.

- Design a prompt that summarizes research papers.

- Create a role-play prompt for a customer service simulation.

Then join communities like:

- Reddit: r/PromptEngineering, r/ChatGPTPro

- Discord servers for prompt engineers and LLM developers

- Twitter/X communities: Follow #PromptEngineering and #GPTTips

- LinkedIn groups for AI and language model professionals

Engaging with others accelerates learning. You’ll see what’s trending, share experiments, and avoid common mistakes.

Real-World Examples of Prompt Engineering

Prompt Engineering in Customer Support

Customer support is one of the biggest beneficiaries of prompt engineering. Businesses now use AI bots to handle FAQs, process refunds, and manage returns, all using prompts.

A well-crafted prompt might say:

“You’re a support agent. Respond politely to a customer who didn’t receive their order. Offer a free replacement and apologize.”

This sets the role, context, and tone all in one line. With prompt-based bots, businesses reduce ticket volume, improve response time, and scale support without growing headcount.

Advanced systems even generate prompts dynamically based on CRM data, improving personalization.
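As a rough sketch of dynamic prompt generation, the support prompt above can become a template whose blanks are filled from a customer record. The record fields (`name`, `order_id`) and the sample values are invented for illustration; a real CRM would have its own schema.

```python
# Template based on the support prompt above, with placeholders for CRM data.
SUPPORT_TEMPLATE = (
    "You're a support agent. Respond politely to {name}, "
    "who didn't receive order {order_id}. "
    "Offer a free replacement and apologize."
)


def build_support_prompt(record):
    """Fill the template from a customer record (hypothetical field names)."""
    return SUPPORT_TEMPLATE.format(
        name=record["name"],
        order_id=record["order_id"],
    )


crm_record = {"name": "Alex", "order_id": "A-1001"}  # made-up sample data
prompt = build_support_prompt(crm_record)
print(prompt)
```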

Prompts Behind Popular AI Tools

You’ve likely used AI tools that rely heavily on prompt engineering without realizing it.

Tools like:

- Jasper AI for marketing content.

- Copy.ai for social captions.

- Notion AI for productivity.

- Synthesia for video generation.

All of them use carefully engineered prompts behind the scenes. Their teams test hundreds of prompt variations to deliver high-quality outputs, so the end user never has to.

As more tools go AI-native, the demand for professional prompt engineers will only rise. If you understand how to craft, optimize, and scale prompts, you’re sitting on a goldmine skill.

Conclusion

Prompt engineering isn’t just a trend; it’s a transformational skill for the AI era. Whether you’re a developer, marketer, educator, or entrepreneur, learning how to communicate with AI through prompts can amplify your productivity, creativity, and problem-solving ability.

From basic inputs to complex chain-of-thought reasoning, prompt engineering lets you mold the power of language models to fit your unique needs. It’s part art, part science, and 100% game-changing.

Start small. Practice daily. Tweak, test, and iterate. And most importantly, stay curious. Because in this fast-moving world of AI, those who speak the language of machines will shape the future.

FAQs

Q1: What tools can beginners use to start prompt engineering?

Tools like ChatGPT, OpenAI Playground, Poe, Jasper AI, and PromptHero are beginner-friendly platforms where you can practice prompt crafting, test ideas, and learn from examples. They offer intuitive interfaces and support for both casual and advanced use.

Q2: How is prompt engineering different from fine-tuning an AI model?

Prompt engineering involves crafting smart, targeted inputs to get better responses from existing AI models. Fine-tuning, on the other hand, means training the model itself using your own dataset, which requires more technical knowledge and computing power.

Q3: Can prompt engineering be used in non-tech fields?

Absolutely! Prompt engineering is now a key skill in marketing, journalism, education, customer service, therapy, HR, and law: essentially anywhere language and automation meet. It’s not just a tech skill; it’s a modern communication tool.

Q4: How long does it take to get good at prompt engineering?

Most people become proficient within a few weeks of regular practice. The secret is daily experimentation, learning from failed prompts, and analyzing what types of inputs yield the best outputs for different tasks.

Q5: What are some good communities or forums for learning prompt engineering?

Some top communities include:

- r/PromptEngineering on Reddit

- LearnPrompting.org

- Discord AI-focused servers

- Twitter/X hashtags like #PromptTips

- GitHub repositories featuring curated prompts and prompt libraries